Geneva: Large multi-modal models (LMMs) are a type of fast-growing generative artificial intelligence (AI) technology with applications across healthcare. The World Health Organization (WHO) today released new guidance on the ethics and governance of these LMMs.

The guidance outlines over 40 recommendations for consideration by governments, technology companies, and healthcare providers to ensure the appropriate use of LMMs to promote and protect the health of populations.

WHO consensus ethical principles for the use of AI for health

LMMs can accept one or more types of data input, such as text, videos, and images, and generate diverse outputs that are not limited to the type of data they were given. LMMs are unique in their mimicry of human communication and their ability to carry out tasks they were not explicitly programmed to perform. LMMs have been adopted faster than any consumer application in history, with several platforms – such as ChatGPT, Bard and Bert – entering the public consciousness in 2023.

“Generative AI technologies have the potential to improve health care but only if those who develop, regulate, and use these technologies identify and fully account for the associated risks,” said Dr Jeremy Farrar, WHO Chief Scientist. “We need transparent information and policies to manage the design, development, and use of LMMs to achieve better health outcomes and overcome persisting health inequities.”

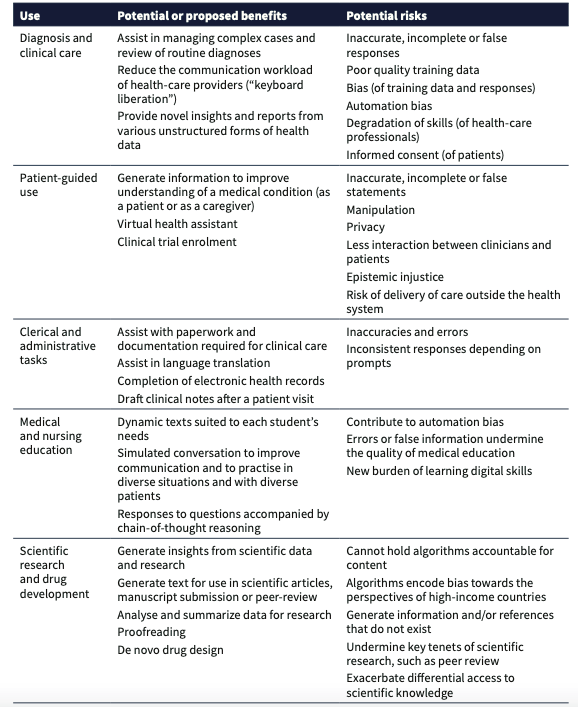

The new WHO guidance outlines five broad applications of LMMs for health.

Potential benefits and risks in various uses of LMMs in healthcare

The new WHO guidance includes recommendations for governments, which have the primary responsibility to set standards for the development and deployment of LMMs, and for their integration and use for public health and medical purposes. For example, governments should:

- Invest in or provide not-for-profit or public infrastructure, including computing power and public data sets, accessible to developers in the public, private and not-for-profit sectors, that requires users to adhere to ethical principles and values in exchange for access.

- Use laws, policies and regulations to ensure that LMMs and applications used in health care and medicine, irrespective of the risk or benefit associated with the AI technology, meet ethical obligations and human rights standards that affect, for example, a person’s dignity, autonomy or privacy.

- Assign an existing or new regulatory agency to assess and approve LMMs and applications intended for use in health care or medicine – as resources permit.

- Introduce mandatory post-release auditing and impact assessments, including for data protection and human rights, by independent third parties when an LMM is deployed on a large scale. The auditing and impact assessments should be published and should include outcomes and impacts disaggregated by the type of user, including for example by age, race or disability.

The guidance also includes the following key recommendations for developers of LMMs, who should ensure that:

- LMMs are designed not only by scientists and engineers. Potential users and all direct and indirect stakeholders, including medical providers, scientific researchers, health care professionals and patients, should be engaged from the early stages of AI development in structured, inclusive, transparent design and given opportunities to raise ethical issues, voice concerns and provide input for the AI application under consideration.

- LMMs are designed to perform well-defined tasks with the necessary accuracy and reliability to improve the capacity of health systems and advance patient interests. Developers should also be able to predict and understand potential secondary outcomes.

-Global Bihari Bureau